A GPU cache is a small, fast memory inside a Graphics Processing Unit (GPU) that stores frequently used data. It helps speed up processing by reducing the need to access slower main memory, improving performance in gaming, video editing, and AI tasks.

Understanding Cache Memory:

What is Cache Memory:

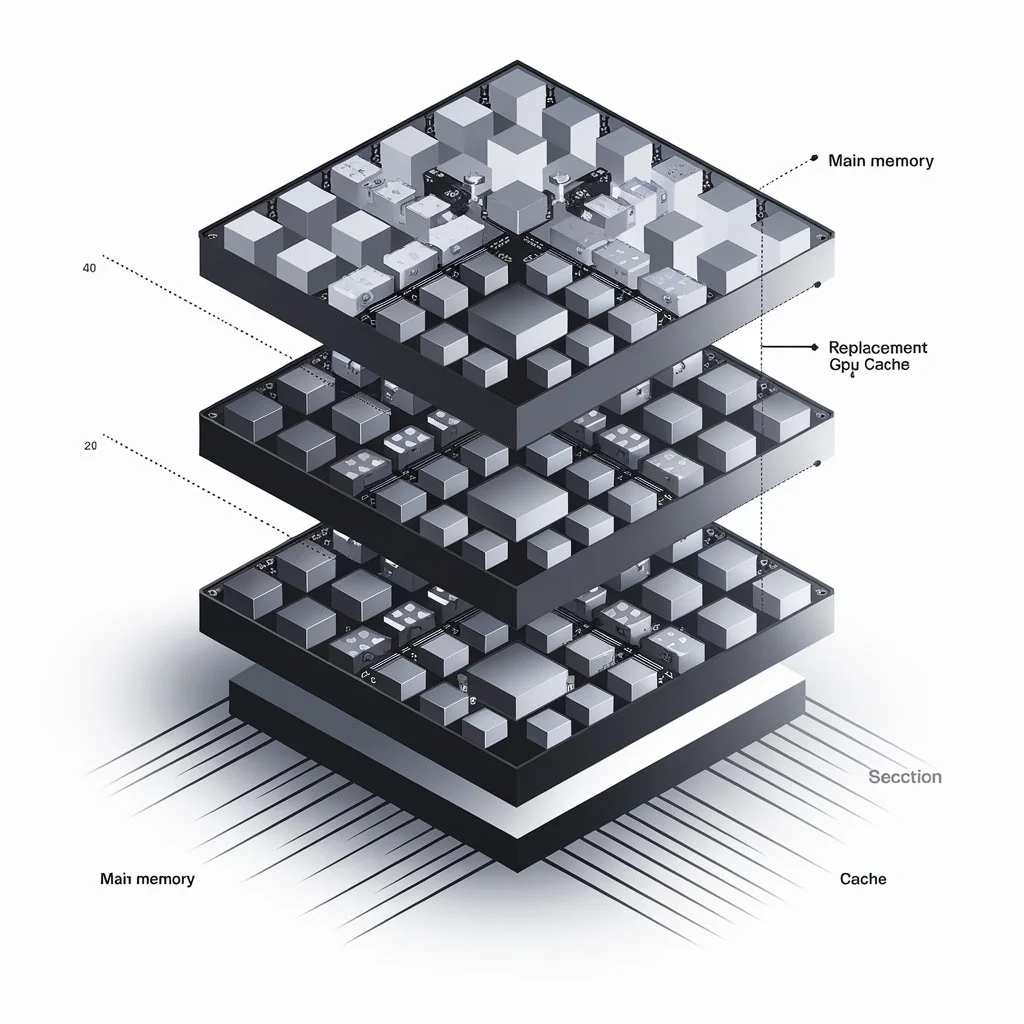

Cache memory is a small, high-speed storage area inside a processor that stores frequently accessed data. This reduces the time needed to fetch data from main memory (RAM for CPUs, VRAM for GPUs).

How Cache Memory Works:

When the GPU processes data, it first checks if the required data is in the cache. If it is, it retrieves it quickly (cache hit). If not, it fetches it from the slower main memory (cache miss), leading to increased latency.
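The hit-or-miss check described above can be sketched in a few lines of Python. This is only a toy model, not how GPU hardware is built: the `TinyCache` class, its four-entry capacity, the least-recently-used eviction rule, and the `vram` dictionary are all assumptions made for illustration.

```python
from collections import OrderedDict


class TinyCache:
    """Toy fully associative cache with least-recently-used (LRU) eviction."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = OrderedDict()  # address -> data, oldest entry first
        self.hits = 0
        self.misses = 0

    def read(self, address, main_memory):
        if address in self.lines:
            # Cache hit: serve the data without touching main memory.
            self.hits += 1
            self.lines.move_to_end(address)
            return self.lines[address]
        # Cache miss: fetch from the slower main memory, then keep a copy.
        self.misses += 1
        data = main_memory[address]
        self.lines[address] = data
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used entry
        return data


vram = {addr: addr * 10 for addr in range(16)}  # stand-in for VRAM contents
cache = TinyCache(capacity=4)
for addr in [0, 1, 2, 0, 1, 2, 0, 1]:
    cache.read(addr, vram)
print(cache.hits, cache.misses)  # 5 3
```

In this run the first three reads miss and the next five hit, because addresses 0, 1, and 2 are reused while they are still in the cache.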

Types of Cache in a GPU:

L1 Cache

L1 is the smallest and fastest cache, located inside or right next to the GPU's compute cores (for example, one per streaming multiprocessor on NVIDIA hardware). It stores critical data for immediate processing.

L2 Cache

L2 cache is larger than L1 and is typically shared across the GPU's cores. It sits between L1 and VRAM, so data that misses in L1 can often be served without a trip to VRAM.

L3 Cache (If Applicable)

Some modern GPUs, like AMD’s RDNA 2 and RDNA 3 architectures, feature an additional L3 cache layer to enhance performance further. AMD calls this Infinity Cache, which improves bandwidth efficiency by reducing the need to access slower VRAM frequently.
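To see why an extra cache level can help, the average cost of a memory access can be estimated level by level. The sketch below uses the standard average-access-time recurrence; every latency and miss rate in it is a made-up number chosen for easy arithmetic, not a measurement of any real GPU.

```python
def average_access_time(levels, memory_latency):
    """Estimate the average cycles per access for a cache hierarchy.

    levels: list of (hit_latency, miss_rate) pairs, fastest cache first.
    memory_latency: cycles to reach VRAM when every cache level misses.
    """
    cost = memory_latency
    # Work backwards from VRAM: each level pays its own latency, plus the
    # cost of the next level down for the fraction of accesses that miss.
    for hit_latency, miss_rate in reversed(levels):
        cost = hit_latency + miss_rate * cost
    return cost


# Hypothetical figures (cycles, miss rate), for illustration only.
L1_CACHE = (32, 0.5)
L2_CACHE = (200, 0.5)
L3_CACHE = (256, 0.25)
VRAM_LATENCY = 600

two_level = average_access_time([L1_CACHE, L2_CACHE], VRAM_LATENCY)
three_level = average_access_time([L1_CACHE, L2_CACHE, L3_CACHE], VRAM_LATENCY)
print(two_level, three_level)  # 282.0 233.5
```

With these assumed numbers the third level lowers the average cost because it catches most of the accesses that would otherwise go all the way to VRAM. If the extra level were slow or rarely hit, the same formula would show little or no gain.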

Why Do GPUs Need Cache:

Speeding Up Computations:

GPU cache shortens data retrieval time, which helps the GPU keep up with real-time processing of high-resolution graphics.

Reducing Memory Latency:

With cache, GPUs don’t have to rely solely on VRAM, reducing delays in accessing critical data.

Efficient Parallel Processing:

Since GPUs handle thousands of threads simultaneously, having a cache allows multiple cores to access frequently used data without repeatedly fetching it from VRAM.

How GPU Cache Works:

When rendering a game scene or running AI algorithms, the GPU cache stores frequently used textures, shaders, or neural network weights to speed up processing. It acts as a buffer, reducing dependency on the slower VRAM.

GPU Cache vs CPU Cache:

Key Differences:

While both caches serve similar purposes, GPU cache is optimized for throughput across many parallel threads, whereas CPU cache is optimized for low latency on a small number of largely sequential threads.

Which One is More Important:

For gaming and AI, GPU cache is crucial. For general computing, CPU cache takes precedence.

Impact of Cache on Gaming Performance:

A well-optimized GPU cache helps reduce lag, improve FPS, and enhance texture streaming. Modern games with high-resolution assets benefit significantly from faster cache architectures like AMD’s Infinity Cache.

Impact on AI and Machine Learning:

GPU cache plays a significant role in neural networks, allowing faster computation and improved efficiency in training AI models. NVIDIA’s Tensor Cores rely on cache for optimized AI processing.

Cache Optimization Techniques in GPUs:

Techniques like better load balancing, prefetching algorithms, and efficient memory management help GPUs maximize cache utilization.
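One reason memory management matters for cache utilization is that caches load data in fixed-size lines, so the order in which a program walks through memory changes how often it hits. The sketch below replays two traversal orders of the same 32x32 array through a toy cache. The line size, the four-line capacity, and the LRU policy are assumptions for illustration rather than parameters of a real GPU.

```python
from collections import OrderedDict


def hit_rate(addresses, line_size=8, num_lines=4):
    """Replay element addresses through a small LRU cache of fixed-size lines."""
    cache = OrderedDict()  # cache line number -> True, oldest entry first
    hits = 0
    for addr in addresses:
        line = addr // line_size  # neighbouring elements share a cache line
        if line in cache:
            hits += 1
            cache.move_to_end(line)
        else:
            cache[line] = True
            if len(cache) > num_lines:
                cache.popitem(last=False)  # evict the least recently used line
    return hits / len(addresses)


ROWS, COLS = 32, 32
# The array is stored row by row, so element (r, c) lives at r * COLS + c.
row_major = [r * COLS + c for r in range(ROWS) for c in range(COLS)]
col_major = [r * COLS + c for c in range(COLS) for r in range(ROWS)]

print(hit_rate(row_major), hit_rate(col_major))  # 0.875 0.0
```

Walking along rows reuses each loaded line seven more times, while walking down columns touches a new line on every access and evicts each line before it can be reused.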

Challenges with GPU Cache:

Issues like cache coherency, bandwidth limitations, and cache thrashing can impact GPU performance, requiring efficient memory hierarchy design.

Future of GPU Cache Technology:

AI-driven cache management, stacked memory architectures, and advanced compression techniques will shape the future of GPU caching.

How to Monitor and Improve GPU Cache Usage:

Tools like NVIDIA’s Nsight, AMD’s Radeon GPU Profiler, or Intel’s Graphics Performance Analyzers can help analyze and optimize cache performance.

GPU Brands and Their Cache Technologies:

NVIDIA: Features L1 and L2 caches that feed its shader cores and Tensor Cores for AI and gaming

AMD: Introduced Infinity Cache to reduce VRAM dependency and improve bandwidth

Intel: Focuses on efficient cache allocation in Arc GPUs, optimizing data flow

Common Misconceptions About GPU Cache:

Many believe that more cache always means better performance, but architecture and optimization are just as crucial.
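A small experiment makes this concrete. The sketch below sweeps the capacity of a toy LRU cache over two made-up workloads: one that loops over eight addresses and one that streams through memory without ever reusing data. The workloads, capacities, and eviction policy are illustrative assumptions.

```python
from collections import OrderedDict


def lru_hit_rate(addresses, capacity):
    """Fraction of accesses served by an LRU cache holding `capacity` entries."""
    cache = OrderedDict()
    hits = 0
    for addr in addresses:
        if addr in cache:
            hits += 1
            cache.move_to_end(addr)
        else:
            cache[addr] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used entry
    return hits / len(addresses)


looped = list(range(8)) * 10   # the same 8 addresses, visited 10 times
streaming = list(range(80))    # 80 different addresses, each visited once

for capacity in (4, 8, 16, 32):
    print(capacity, lru_hit_rate(looped, capacity), lru_hit_rate(streaming, capacity))
```

For the looped workload the hit rate jumps once the cache can hold all eight addresses and then stays flat, so capacity beyond that point buys nothing. The streaming workload never hits at any size, because there is no reuse for a cache to exploit.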

GPU Cache vs. CPU Cache: What’s the Difference:

| Feature | GPU Cache | CPU Cache |
| --- | --- | --- |
| Purpose | Optimized for parallel processing | Optimized for general computation |
| Size | Smaller per core, tuned for throughput | Larger per core, hierarchical, tuned for low latency |
| Use Case | Graphics and AI | General computing tasks |

Common Issues with GPU Cache

Cache Thrashing

Cache thrashing occurs when too much data competes for limited cache space, so entries are evicted before they can be reused and the hit rate collapses.
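One common form of this competition can be sketched under the assumption of a direct-mapped cache, where each address can live in exactly one slot. In the toy model below, two buffers whose elements map to the same slots keep evicting each other, and shifting one buffer by a few slots removes the conflict. The slot count and base addresses are hypothetical.

```python
def direct_mapped_hits(addresses, num_slots):
    """Count hits in a direct-mapped cache: each address maps to one fixed slot."""
    slots = [None] * num_slots
    hits = 0
    for addr in addresses:
        index = addr % num_slots
        if slots[index] == addr:
            hits += 1
        else:
            slots[index] = addr  # evict whatever occupied this slot
    return hits


def interleaved(a_base, b_base, length=4, passes=4):
    """Alternate reads of element i from two buffers, repeated `passes` times."""
    trace = []
    for _ in range(passes):
        for i in range(length):
            trace += [a_base + i, b_base + i]
    return trace


NUM_SLOTS = 8
# Buffer B starts a whole multiple of NUM_SLOTS after A, so A[i] and B[i] collide.
conflicting = interleaved(a_base=0, b_base=8 * NUM_SLOTS)
# Shifting B by 4 slots gives the two buffers separate slots.
padded = interleaved(a_base=0, b_base=8 * NUM_SLOTS + 4)

print(direct_mapped_hits(conflicting, NUM_SLOTS), "of", len(conflicting))  # 0 of 32
print(direct_mapped_hits(padded, NUM_SLOTS), "of", len(padded))            # 24 of 32
```

Both traces read the same amount of data, and the cache is never more than full in either case. Only the placement differs, which is why thrashing is often addressed by changing data layout rather than by adding capacity.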

Stale Data and Performance Drops:

Old, unused data that lingers in the cache takes up space that active data could use, which can slow down performance if not properly managed.

How to Optimize GPU Cache Performance:

Driver Updates and Tweaks:

Keeping GPU drivers updated helps, because driver releases can include memory-management improvements that make better use of the cache.

Overclocking Considerations:

Overclocking the GPU can sometimes improve cache performance, but it also increases heat output.

Benefits of GPU Cache in Gaming and AI:

Faster Frame Rendering:

Games run at higher FPS (frames per second) when a GPU can quickly retrieve necessary textures and instructions.

Smoother Gameplay:

Less stuttering and lag occur when a GPU has an efficient caching system.

AI and Machine Learning Applications:

GPU cache helps speed up AI tasks like image recognition and real-time data processing.

GPU Cache vs. VRAM: Understanding the Difference

| Feature | GPU Cache | VRAM |
| --- | --- | --- |
| Speed | Extremely fast | Slower than cache |
| Size | Small (MBs) | Large (GBs) |
| Function | Temporarily stores frequently accessed data and instructions | Holds textures, shaders, and other assets |
| Latency | Very low | Higher than cache |

Advantages of GPU Cache:

Faster Image Processing:

With faster data retrieval, rendering times in games, video editing, and 3D modeling are significantly improved.

Reduced Lag in Gaming:

When frequently used shaders and textures are kept in the cache, games load and render more smoothly, with fewer frame drops.

Optimized AI & Deep Learning Tasks:

Machine learning models rely on large-scale computations. A well-designed GPU cache accelerates training and inference processes.

Energy Efficiency:

Fetching data from VRAM requires more power. By utilizing cache, GPUs reduce energy consumption while maintaining peak performance.

How to Maximize GPU Cache Performance:

Update GPU Drivers Regularly

GPU manufacturers release updates that enhance cache efficiency and performance.

Optimize Graphics Settings:

Lowering memory-intensive settings reduces cache overload and improves efficiency.

Avoid VRAM Bottlenecks:

Using too many high-resolution assets can strain VRAM and limit cache effectiveness.

Monitor Overclocking Impact:

While overclocking boosts clock speed, an unstable or overheating overclock can force the GPU to throttle and cancel out any cache gains, so test changes carefully.

How Major GPU Brands Utilize Cache:

NVIDIA GPUs

- Use L1 and L2 caches for real-time performance optimization.

- RTX series features advanced memory management for AI and gaming.

AMD GPUs

- Infinity Cache improves bandwidth while reducing latency.

- Found in RX 6000 and newer GPU series.

FAQs

- What is GPU Cache?

GPU cache is a small, fast memory inside the GPU that stores frequently used data to speed up performance and reduce delays in tasks like gaming and AI.

- How does GPU Cache help in gaming?

The GPU cache helps games run smoothly by reducing lag and improving FPS by quickly accessing textures and shaders.

- What is the difference between GPU Cache and VRAM?

The GPU cache is faster and smaller, temporarily holding frequently accessed data and instructions, while VRAM is slower and larger, holding textures and other assets.

- What are the types of cache in GPUs?

GPUs typically have L1, L2, and sometimes L3 cache, each with varying sizes and roles in improving data access speed.

- How can I improve GPU cache performance?

To keep the cache running efficiently, regularly update your GPU drivers, adjust graphics settings, and avoid overloading it.

Conclusion:

GPU cache is key to improving performance by reducing memory delays and speeding up data access, especially in gaming and AI tasks. By optimizing cache usage, users can achieve smoother gameplay, faster rendering, and more efficient machine learning processes.
